Text to Video: The Next Leap in AI Generation | Summary and Q&A

TL;DR

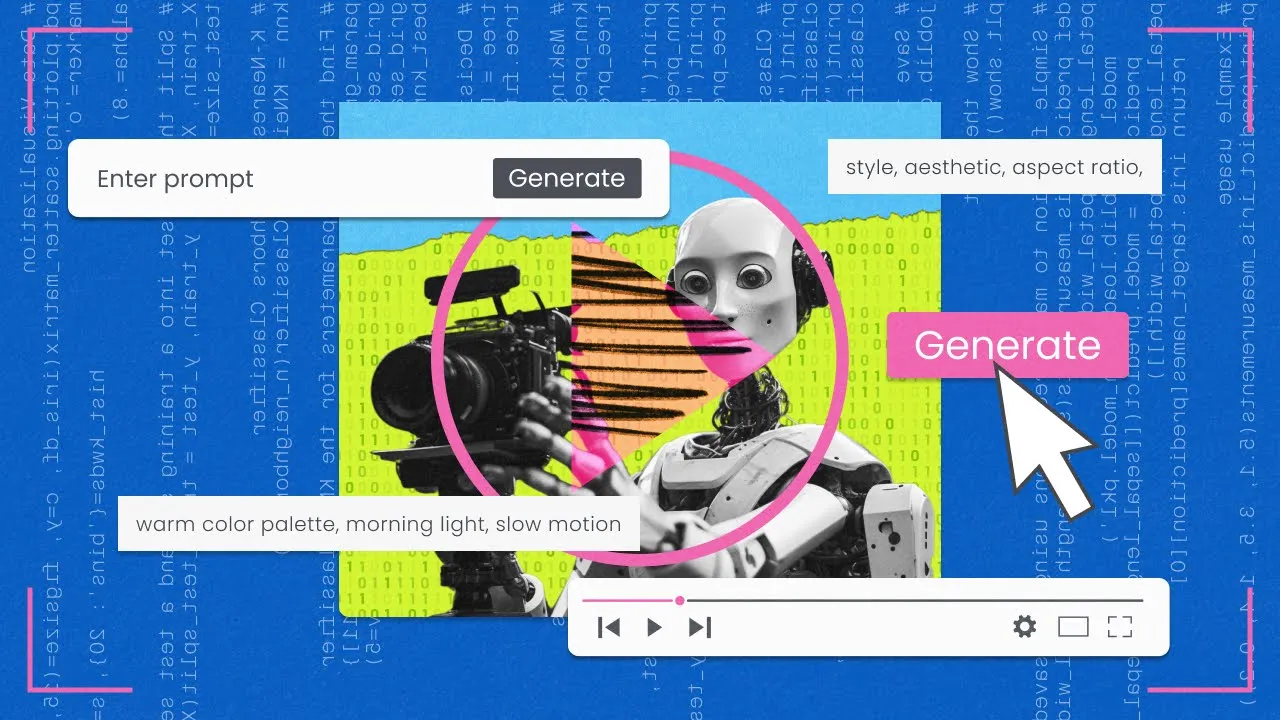

Researchers at Stability AI have developed Stable Video Diffusion, an open-source generative video model that uses text prompts to generate realistic videos.

Key Insights

- 🎮 Stable Video Diffusion is an open-source generative video model that utilizes text prompts to generate realistic videos.

- 🎮 Text-to-video generation poses unique challenges compared to text-to-image generation due to the larger size and dynamic nature of videos.

- 😤 The research team focused on data loading, structural consistency, and incorporating physics and 3D knowledge into the video model.

- 🛟 Stable Video Diffusion has the potential for various applications, including video editing, content creation, and bringing static images or memes to life.

- 🎮 The model can be further improved by generating longer videos, accepting longer videos as input, and adding multimodality, such as audio tracks for the generated videos.

- 🖐️ Compute constraints and efficiency play a crucial role in driving innovation and finding creative solutions in the field of AI.

Questions & Answers

Q: What is Stable Video Diffusion, and how does it differ from other generative models?

Stable Video Diffusion is a generative model that produces videos from text prompts. Unlike text-to-image models, it has to account for the dynamic nature of video, which requires a deeper understanding of physics and 3D objects.

Q: What challenges did the research team face in developing Stable Video Diffusion?

The team encountered challenges with data loading and processing due to the larger size and dimensionality of video data. They also had to ensure structural consistency and incorporate knowledge about the physical properties of the world into the model.
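To make the size difference concrete, here is a back-of-the-envelope sketch in Python. The resolution, channel count, and frame count are illustrative assumptions rather than figures from the talk, and `num_values` is a hypothetical helper written for this example.

```python
def num_values(height: int, width: int, channels: int = 3, frames: int = 1) -> int:
    """Count the raw values in an uncompressed image (frames=1) or video clip."""
    return frames * height * width * channels


# Assumed example sizes, chosen only for illustration.
HEIGHT, WIDTH, FRAMES = 576, 1024, 14

image_values = num_values(HEIGHT, WIDTH)
clip_values = num_values(HEIGHT, WIDTH, frames=FRAMES)

print(f"single image: {image_values:,} values")
print(f"{FRAMES}-frame clip: {clip_values:,} values")
print(f"ratio: {clip_values // image_values}x")
```

Even a short clip multiplies the data per training sample by its frame count, which is one reason data loading becomes a bottleneck before any modeling work begins.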

Q: How did the team approach selecting the datasets for training the model?

The team initially trained an image model to lay the foundation for video generation. They then trained on a large dataset of videos to capture motion and visual understanding and further fine-tuned the model using a smaller, curated dataset to refine its performance.
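The three stages described above can be sketched as an ordered schedule in which each stage starts from the checkpoint produced by the previous one. This is only an illustration of the staging idea, not the team's training code: the stage names, the `Stage` class, `run_schedule`, and the stand-in `record_stage` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Stage:
    name: str  # hypothetical label for the stage
    data: str  # kind of dataset used in this stage
    goal: str  # what the stage is meant to teach the model


# Stage order follows the summary: images first, then large-scale video,
# then a smaller curated video set.
STAGES = [
    Stage("image_pretraining", "images", "learn visual foundations"),
    Stage("video_pretraining", "large video dataset", "learn motion"),
    Stage("video_finetuning", "small curated video dataset", "refine quality"),
]


def run_schedule(stages: List[Stage],
                 train_fn: Callable[[Stage, Optional[list]], list]) -> Optional[list]:
    """Run stages in order, passing each stage's result into the next."""
    checkpoint = None
    for stage in stages:
        checkpoint = train_fn(stage, checkpoint)
    return checkpoint


def record_stage(stage: Stage, checkpoint: Optional[list]) -> list:
    """Stand-in for real training: only records which stages have run."""
    return (checkpoint or []) + [stage.name]


lineage = run_schedule(STAGES, record_stage)
print(" -> ".join(lineage))
```

In a real pipeline `train_fn` would load the previous weights and train on the stage's dataset; the point of the sketch is only that later stages build on earlier ones rather than starting from scratch.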

Q: What are some potential applications for text-to-video AI models like Stable Video Diffusion?

Text-to-video AI models have numerous applications, such as video editing, content creation, virtual reality experiences, and animation. They can also be used to bring famous artworks or memes to life.

Summary & Key Takeaways

- Stable Video Diffusion is a state-of-the-art generative model that creates videos based on text prompts.

- Text-to-video generation is more challenging than text-to-image generation due to the larger size and dynamic nature of videos.

- The research team focused on selecting the right datasets to enable realistic representations of the world in video form.

- The model has already been successful, and there are many potential applications for text-to-video AI models.
