Michael Jordan | Summary and Q&A

TL;DR

Big data poses challenges for both statistics and computation, particularly around inference, personalized services, and the handling of large volumes of data. Addressing these challenges requires integrating statistical and computational thinking.

Key Insights

- 😃 Big data problems not only involve large volumes and velocities of data but also require addressing inferential issues for accurate analysis.

- 😃 Different types of big data problems require different mathematical and conceptual approaches.

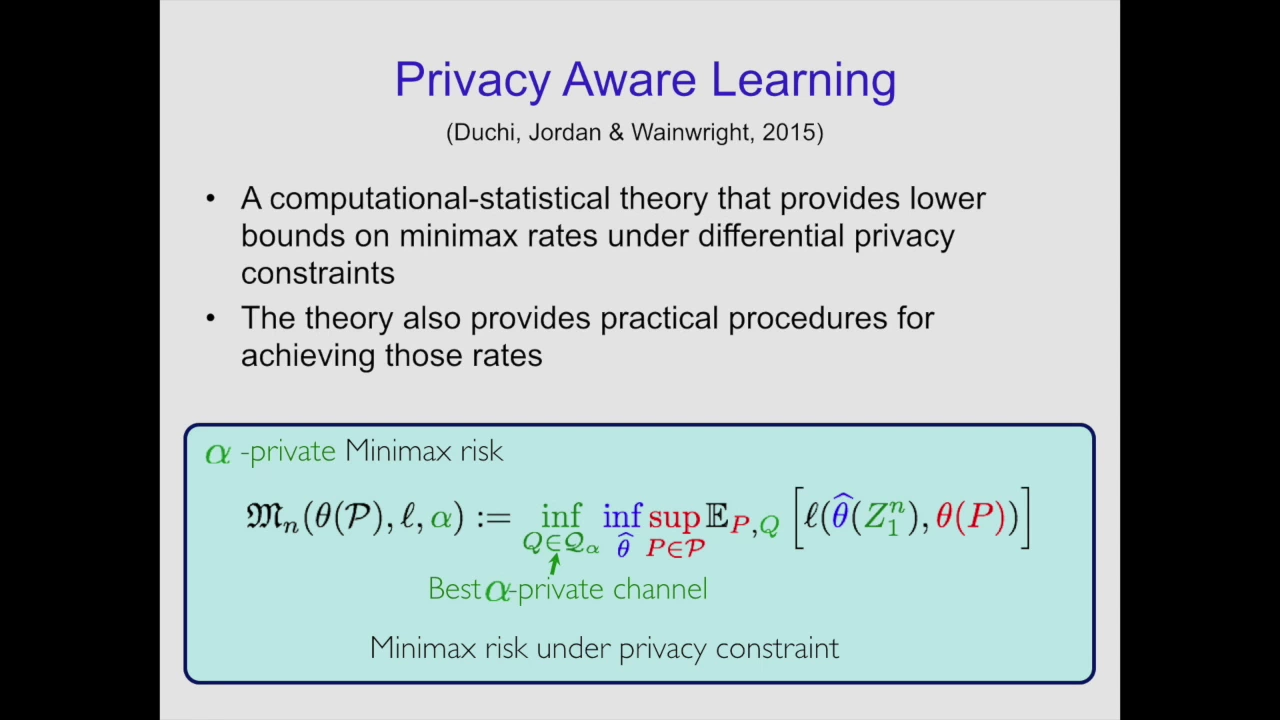

- ☠️ Combining statistics and computation is crucial in addressing challenges related to personalized services, control over error rates, scalability, and privacy concerns.

- 🤢 The bag of little bootstraps is a scalable, frequentist method for estimating error bars without requiring prior information.


Questions & Answers

Q: What is the main challenge in addressing big data problems?

The main challenge lies in combining statistics and computation effectively to tackle inferential issues, personalized services, control over error rates, scalability, and privacy concerns.

Q: How does inferential thinking differ from the computer science perspective?

Inferential thinking goes beyond the mere execution of machine learning algorithms and involves considering sampling patterns, robustness, and making statistical inferences about populations. Computer science, on the other hand, focuses on computational efficiency and worst-case complexities.

Q: What is the bag of little bootstraps method?

The bag of little bootstraps is a frequentist method for estimating error bars without requiring prior information. It draws several small subsamples of the data, resamples each one repeatedly up to the size of the full dataset, computes the estimate of interest on every resample, and combines the resulting error estimates across subsamples.
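The resampling scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the speaker's code: it assumes the statistic of interest is a mean, and the helper names (`bag_of_little_bootstraps`, `multinomial_counts`, `weighted_mean`) and defaults (`gamma=0.7`, 5 subsamples, 50 resamples) are hypothetical choices. Each subsample has size b = n^γ, and a resample of the full size n is represented by b multinomial counts rather than n copied points.

```python
import random
import statistics


def multinomial_counts(rng, n, b):
    """Counts from n draws with replacement over b items (a multinomial sample).

    Only the b counts are stored, never the n resampled points themselves.
    """
    counts = [0] * b
    for _ in range(n):
        counts[rng.randrange(b)] += 1
    return counts


def weighted_mean(values, weights):
    """Mean of `values` where value i is counted `weights[i]` times."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def bag_of_little_bootstraps(data, estimator, num_subsamples=5, gamma=0.7,
                             num_resamples=50, seed=0):
    """Estimate the standard error of `estimator` on `data` (hypothetical sketch).

    `estimator(values, weights)` must accept weighted data so that a
    resample of size n can be represented by b distinct points plus counts.
    """
    rng = random.Random(seed)
    n = len(data)
    b = max(2, int(n ** gamma))  # subsample size b = n^gamma, much smaller than n
    per_subsample_se = []
    for _ in range(num_subsamples):
        # Small subsample drawn without replacement; in a distributed
        # setting each one could be handled by a separate worker.
        subsample = rng.sample(data, b)
        estimates = []
        for _ in range(num_resamples):
            # Resample up to the FULL size n, so the spread of the
            # estimates is on the scale of the full dataset.
            counts = multinomial_counts(rng, n, b)
            estimates.append(estimator(subsample, counts))
        per_subsample_se.append(statistics.stdev(estimates))
    # Average the per-subsample error estimates.
    return statistics.mean(per_subsample_se)


if __name__ == "__main__":
    rng = random.Random(1)
    data = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # simulated data
    blb_se = bag_of_little_bootstraps(data, weighted_mean)
    textbook_se = statistics.stdev(data) / len(data) ** 0.5
    print(f"BLB standard error:      {blb_se:.4f}")
    print(f"sigma/sqrt(n) reference: {textbook_se:.4f}")
```

For a mean, the textbook standard error σ/√n gives a convenient check, so on simulated data the two printed numbers should be close. The weighted-estimator design is what keeps memory per subsample proportional to b rather than n.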

Q: How does the bag of little bootstraps improve on the traditional bootstrap method?

Rather than resampling the full dataset many times, it works with small subsamples that each fit comfortably on a single machine, and it resamples each one up to the full data size so that the resulting error bars are on the correct scale. Because the subsamples are processed independently, the computation parallelizes naturally, which yields significant improvements in computational efficiency.
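To see why resampling up to the full size matters for getting error bars on the correct scale, the sketch below compares three bootstrap estimates of the standard error of the mean on simulated Gaussian data: resampling a small subsample at its own size b, resampling the same subsample up to the full size n (the bag of little bootstraps inner step), and an ordinary bootstrap on all n points. The data, the sizes, and the helper name `bootstrap_se_of_mean` are illustrative assumptions.

```python
import random
import statistics


def bootstrap_se_of_mean(points, resample_size, num_resamples, rng):
    """Spread (standard deviation) of the sample mean across bootstrap
    resamples, each drawing `resample_size` items from `points` with
    replacement."""
    means = [statistics.fmean(rng.choices(points, k=resample_size))
             for _ in range(num_resamples)]
    return statistics.stdev(means)


rng = random.Random(42)
n = 10_000
data = [rng.gauss(0.0, 1.0) for _ in range(n)]   # simulated data, sigma = 1
b = int(n ** 0.6)                                # small subsample, b = 251
subsample = rng.sample(data, b)

# (1) Resample the subsample at its own size b: the error bar reflects b, not n.
naive = bootstrap_se_of_mean(subsample, b, 100, rng)
# (2) Resample the same subsample up to size n (the BLB inner step).
rescaled = bootstrap_se_of_mean(subsample, n, 100, rng)
# (3) Ordinary bootstrap on all n points, for reference.
full = bootstrap_se_of_mean(data, n, 100, rng)

print(f"b-out-of-b on subsample: {naive:.4f}")
print(f"n-out-of-b on subsample: {rescaled:.4f}")
print(f"ordinary bootstrap:      {full:.4f}")
```

Under these assumptions the first estimate should come out roughly √(n/b) ≈ 6 times too large, while the second should be close to the third even though it only ever touches 251 distinct points.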

Summary & Key Takeaways

- The speaker discusses the history of big data in fields such as particle physics and genomics, emphasizing the importance of inferential issues in addition to the volume and velocity of data.

- The speaker highlights the shift in big data problems from testing a single hypothesis to exploring many hypotheses at once, and the need for different mathematical and conceptual approaches for different types of problems.

- The speaker emphasizes the difficulty of combining statistics and computation to address challenges such as personalized services, control over error rates, scalability, and privacy.
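The summary mentions control over error rates when many hypotheses are explored at once, but it does not say which procedure the speaker has in mind. As one standard illustration, the sketch below implements the Benjamini-Hochberg procedure for controlling the false discovery rate. The function name and the ten p-values are hypothetical, chosen only to show the effect.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Indices of hypotheses rejected by the Benjamini-Hochberg step-up
    procedure, which controls the false discovery rate at level `alpha`
    (valid for independent or positively dependent tests)."""
    m = len(p_values)
    # Hypothesis indices ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest 1-based rank k with p_(k) <= k * alpha / m (0 if none).
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * alpha / m:
            cutoff = rank
    # Reject every hypothesis whose p-value is among the `cutoff` smallest.
    return sorted(order[:cutoff])


# Hypothetical p-values from ten simultaneous tests.
p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]

print(sum(p <= 0.05 for p in p_values))   # 5 rejections testing each at 0.05
print(benjamini_hochberg(p_values))       # [0, 1] with FDR control at 0.05
```

With these made-up numbers, testing each hypothesis separately at 0.05 rejects five, while the false-discovery-rate procedure rejects only the two smallest p-values.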


Explore More Summaries from a16z 📚